Interpolating between Images with Diffusion Models

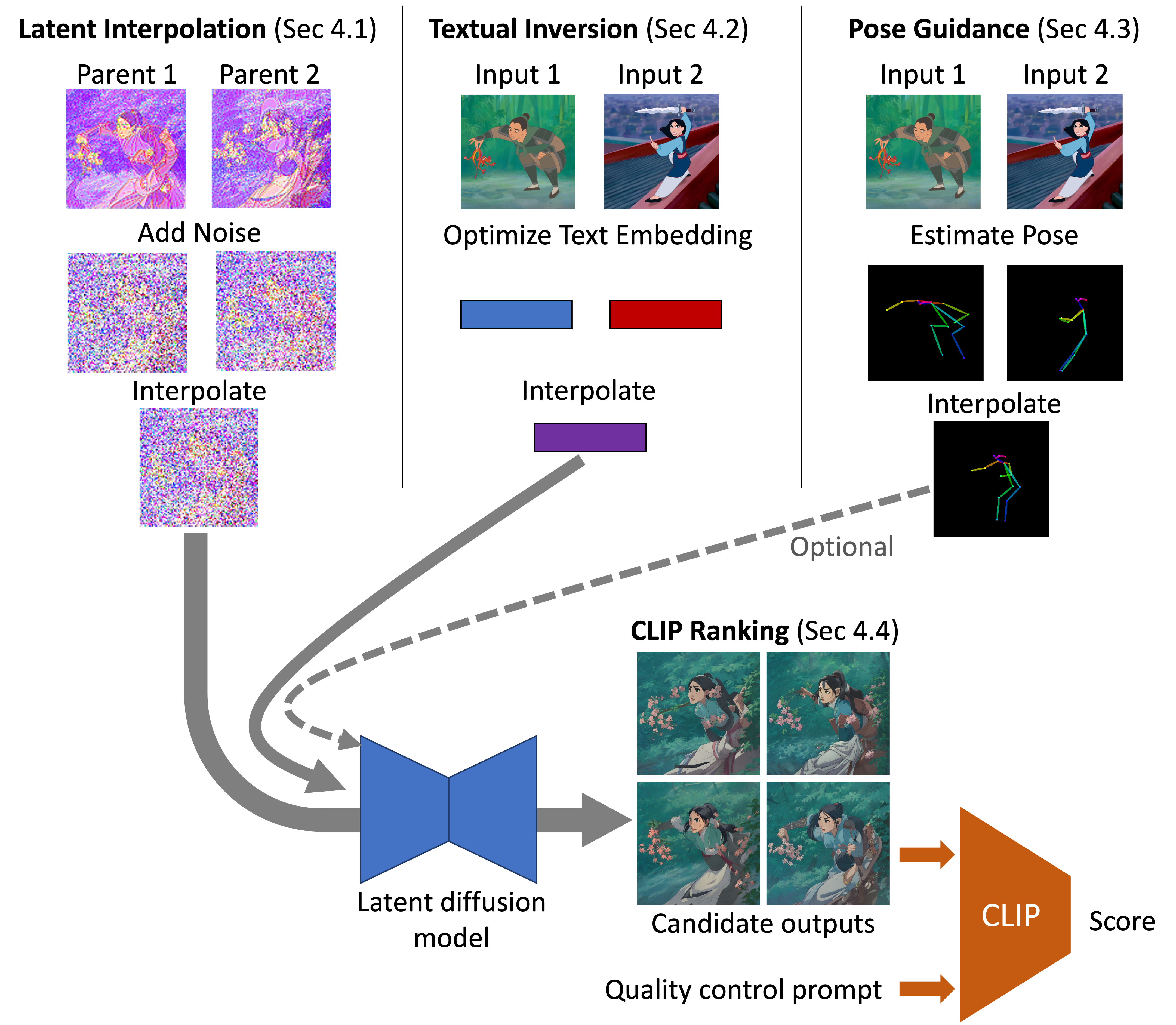

One little-explored frontier of image generation and editing is the task of interpolating between two input images. We present a method for zero-shot controllable interpolation using latent diffusion models.

We apply interpolation in latent space at a sequence of decreasing noise levels, then perform denoising conditioned on interpolated text embeddings derived from textual inversion and (optionally) subject poses derived from OpenPose. For greater consistency, or to specify additional criteria, we can generate several candidates and use CLIP to select the highest-quality image.
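To make the interpolation and selection steps concrete, here is a minimal sketch in plain Python. It is not the released code. It assumes spherical linear interpolation (slerp) for the noisy latents, a common choice for approximately Gaussian vectors, and plain linear interpolation for the text embeddings; the description above does not specify either formula. The names `lerp`, `slerp`, and `select_best` are hypothetical, and the `score` callable stands in for a CLIP-based quality score.

```python
import math
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Linear interpolation; assumed here for the text embeddings."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def slerp(a: Sequence[float], b: Sequence[float], t: float,
          eps: float = 1e-7) -> List[float]:
    """Spherical linear interpolation; assumed here for the noisy latents."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return lerp(a, b, t)  # angle undefined for a zero vector
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    theta = math.acos(cos_theta)
    if math.sin(theta) < eps:
        return lerp(a, b, t)  # nearly (anti)parallel: fall back to lerp
    w_a = math.sin((1.0 - t) * theta) / math.sin(theta)
    w_b = math.sin(t * theta) / math.sin(theta)
    return [w_a * x + w_b * y for x, y in zip(a, b)]


def select_best(candidates: Sequence[T], score: Callable[[T], float]) -> T:
    """Return the candidate with the highest score.

    `score` is a placeholder for a CLIP-based ranking function.
    """
    return max(candidates, key=score)


# Toy usage: two-dimensional "latents" and "embeddings" at the midpoint.
mid_latent = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
mid_embedding = lerp([0.0, 0.0], [2.0, 4.0], 0.5)
```

In an actual pipeline the vectors would be flattened latent tensors and embedding matrices, and each interpolated latent would then be denoised with the interpolated conditioning before candidates are ranked.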

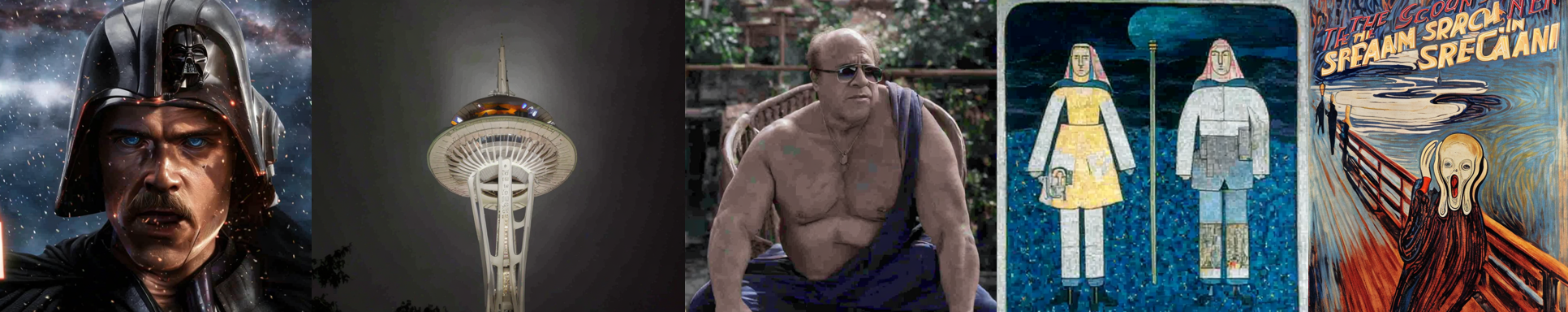

We obtain convincing interpolations across diverse subject poses, image styles, and image content.

Paper presented at the ICML 2023 Workshop on Challenges in Deployable Generative AI. Read our paper for more details, or check out our code.

BibTeX

@misc{wang2023interpolating,
  title={Interpolating between Images with Diffusion Models},
  author={Clinton J. Wang and Polina Golland},
  year={2023},
  eprint={2307.12560},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}